The tech world has been buzzing about something called generative AI. These are computer programs that make new stuff instead of just analyzing existing information. They create brand new text, pictures, music, and videos that never existed before.

Generative AI comes in several types, including GANs, VAEs, and transformer models. Each works in its own way, but they all do the same basic thing: study existing content and then make similar but original new content. We’ve seen these tools pop up everywhere in 2025, from writing assistants to image creators that turn typed descriptions into artwork.

These systems work because they’ve studied massive amounts of existing content. A text generator has read millions of articles, books, and websites. An image creator has looked at countless photos and artwork. After all this studying, they can create new stuff that follows the patterns they learned. The results can be pretty amazing – sometimes it’s hard to tell if a human or computer made something!

What Are Generative Models?

Think of generative models as creative computer programs. They study tons of examples of something, like photos of dogs or written stories, and then try to make new examples that feel like they could have been part of the original set.

The easiest way to understand these models is to compare them with their cousins, discriminative models. A discriminative model looks at emails and sorts them into “spam” or “not spam” folders. But a generative model studies all those emails and then writes brand-new emails that look legitimate. One sorts things; the other creates things.

Today’s generative models make incredibly realistic images, videos, computer code, and written content. Some can even turn one type of content into another, like turning a written description into a picture. To do this, they use algorithms (most often neural networks) that learn the patterns in massive collections of training examples.

Types of Generative AI Models (Explained Simply)

1. Generative Adversarial Networks (GANs)

GANs are creative systems that work through competition between two parts: a creator and a critic. The creator makes fake content while the critic tries to spot what’s real and what’s fake. Through this back-and-forth contest, the creator gets better and better at making realistic content that can fool the critic. Think of it like a counterfeiter and detective constantly challenging each other, with the counterfeiter becoming more skilled with each attempt.

In simple words, GANs work like an art student and an art teacher constantly challenging each other. One part creates images while another part judges how realistic they look, pushing the creator to keep improving.

- Works through a contest between two computer systems – a “generator” that makes fake content and a “discriminator” that tries to spot the fakes

- The generator keeps getting better because it wants to fool the judge

- Creates incredibly realistic photos, especially faces and scenery

- Powers many photo editing apps, design tools, and face generators

- Sometimes struggles with unusual requests or complex scenes with multiple objects
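
To make the contest concrete, here is a minimal sketch of one round of it in plain Python. Everything in it is an illustrative assumption, not how any production GAN is built: the "real" data is five numbers near 4, the generator is the single line `a * z + b`, the critic is a one-input logistic function, and the names (`REAL`, `NOISE`, `d_step`, `g_step`) are made up for this example. Real GANs use deep neural networks and repeat this round many thousands of times.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

# Hypothetical toy data: "real" samples cluster around 4; NOISE feeds the generator.
REAL = [3.5, 3.8, 4.0, 4.2, 4.5]
NOISE = [-1.0, -0.5, 0.0, 0.5, 1.0]

def generate(gen, z):
    a, b = gen
    return a * z + b                 # generator: turns noise into a fake sample

def discriminate(disc, x):
    w, c = disc
    return sigmoid(w * x + c)        # critic: estimated probability that x is real

def d_loss(disc, gen):
    # Critic is penalized for doubting real samples and for trusting fakes.
    real = sum(-math.log(discriminate(disc, x)) for x in REAL) / len(REAL)
    fake = sum(-math.log(1.0 - discriminate(disc, generate(gen, z)))
               for z in NOISE) / len(NOISE)
    return real + fake

def g_loss(disc, gen):
    # Generator is penalized when the critic is not fooled.
    return sum(-math.log(discriminate(disc, generate(gen, z)))
               for z in NOISE) / len(NOISE)

def d_step(disc, gen, lr=0.05):
    # One gradient-descent step on the critic's loss (gradients derived by hand).
    w, c = disc
    gw = gc = 0.0
    for x in REAL:
        p = discriminate(disc, x)
        gw += -(1.0 - p) * x / len(REAL)
        gc += -(1.0 - p) / len(REAL)
    for z in NOISE:
        x = generate(gen, z)
        p = discriminate(disc, x)
        gw += p * x / len(NOISE)
        gc += p / len(NOISE)
    return (w - lr * gw, c - lr * gc)

def g_step(disc, gen, lr=0.05):
    # One gradient-descent step on the generator's loss, with the critic held fixed.
    a, b = gen
    w, _ = disc
    ga = gb = 0.0
    for z in NOISE:
        p = discriminate(disc, generate(gen, z))
        ga += -(1.0 - p) * w * z / len(NOISE)
        gb += -(1.0 - p) * w / len(NOISE)
    return (a - lr * ga, b - lr * gb)

disc, gen = (0.0, 0.0), (1.0, 0.0)   # untrained critic; generator centered on 0

d_before = d_loss(disc, gen)
disc = d_step(disc, gen)             # the critic learns to separate real from fake
d_after = d_loss(disc, gen)

g_before = g_loss(disc, gen)
gen = g_step(disc, gen)              # the generator shifts its output to fool the critic
g_after = g_loss(disc, gen)

print("critic loss:", d_before, "->", d_after)
print("generator loss:", g_before, "->", g_after)
```

In this single round the critic’s loss drops first, then the generator’s loss drops as its output moves slightly toward the real data. Repeating the round is the back-and-forth described above, although on a toy setup like this one it tends to keep oscillating instead of settling, which hints at why GAN training has a reputation for being unstable.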

2. Variational Autoencoders (VAEs)

VAEs are generative models that learn to compress information down to its essential features and then rebuild it. Unlike regular compression that just makes files smaller, VAEs learn what makes something what it is – the key patterns and structures. Once trained, they can create new examples by generating variations on these essential features. It’s similar to learning the “recipe” for something and then making new versions with slight changes to the ingredients.

In simple words, VAEs are like taking a photo, squishing it down to fit in a tiny file, and then unpacking it again. During this process, they learn what makes the image work, allowing them to create new, similar images.

- Has two main parts – one that compresses information and another that rebuilds it

- Encodes each input as a range of likely values (a probability distribution) rather than one fixed point, which is what lets it generate variations

- Makes smoother, sometimes less detailed results compared to other methods

- Used for analyzing medical scans, supporting drug development, and creating 3D models

- Better at handling incomplete information and allowing users to control specific features
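
The compress-then-rebuild idea can be sketched in a few lines of plain Python. This is a rough illustration under loud assumptions: the `encode` and `decode` functions are hypothetical stand-ins with hand-picked behavior, not trained neural networks, and the data is just a pair of numbers. What the sketch does show accurately is the structure of a VAE: the encoder outputs a mean and a variance instead of a single point, a latent value is sampled from that range, and the loss combines reconstruction error with a penalty (the KL divergence) that keeps the latent space close to a standard bell curve.

```python
import math
import random

def encode(x):
    """Hypothetical, untrained encoder: squeeze two numbers into one latent dimension.
    Returns the mean and log-variance of a Gaussian, not a single point."""
    mu = 0.5 * (x[0] + x[1])
    log_var = -2.0               # fixed here; a trained encoder would predict this too
    return mu, log_var

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    sigma = math.exp(0.5 * log_var)
    return mu + sigma * rng.gauss(0.0, 1.0)

def decode(z):
    """Hypothetical decoder: rebuild two numbers from the latent value."""
    return [z, z]

def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, 1) for one latent dimension."""
    return -0.5 * (1.0 + log_var - mu * mu - math.exp(log_var))

def vae_loss(x, rng):
    mu, log_var = encode(x)
    z = sample_latent(mu, log_var, rng)
    x_hat = decode(z)
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return reconstruction + kl_to_standard_normal(mu, log_var)

rng = random.Random(0)
print("loss on one example:", vae_loss([1.0, 1.2], rng))

# Generation: skip the encoder, draw a latent value from N(0, 1), and decode it.
new_sample = decode(rng.gauss(0.0, 1.0))
print("generated:", new_sample)
```

Training would adjust the encoder and decoder to push this loss down. Once that is done, generating something new only needs the last two lines: pick a random point in the latent space and decode it.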

3. Autoregressive Models

Autoregressive models generate content in sequence, one piece at a time, where each new piece depends on what came before it. They look at a series of existing elements and predict what should come next based on patterns they’ve learned. Like a musician who knows how to continue a melody after hearing the first few notes, these models can continue text, music, or other sequential data in a way that makes sense and maintains context.

In simple words, these models create content one piece at a time, like someone writing a story word by word or composing music note by note. Each new piece depends on what came before.

- Builds content step by step (like writing one word after another)

- Uses previous content to figure out what should come next

- Especially good at writing text that flows naturally from sentence to sentence

- Found in many writing assistants and chatbots on websites

- Can sometimes lose track in very long pieces of content
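
As a rough illustration of "predict the next piece from what came before", here is a tiny word-level generator in plain Python. It is a deliberately simple sketch: it only looks at the single previous word (a bigram table), and the training text in `CORPUS` is made up for this example. Modern autoregressive models look much further back and learn from vastly more text, but the generation loop has the same shape.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up training text; real models train on billions of words.
CORPUS = "the cat sat on the mat and the cat ran to the mat".split()

# Count which word follows which (a bigram table).
next_counts = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    next_counts[prev][nxt] += 1

def generate(start, max_words, rng):
    """Build a sentence one word at a time, each choice depending on the previous word."""
    words = [start]
    while len(words) < max_words:
        options = next_counts.get(words[-1])
        if not options:              # no known continuation: stop early
            break
        candidates = list(options)
        weights = [options[w] for w in candidates]
        # Sample the next word in proportion to how often it followed the current one.
        words.append(rng.choices(candidates, weights=weights)[0])
    return words

text = generate("the", 8, random.Random(0))
print(" ".join(text))
```

Every pair of neighboring words in the output appeared somewhere in the training text, yet the sentence as a whole may be new. Because this sketch only remembers one word back, it also shows in miniature how such models can lose the thread over longer stretches.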

4. Transformer-Based Models

Transformer models use a special attention mechanism that helps them understand relationships between different parts of data, even when those parts are far apart from each other. They excel at handling language because they can keep track of how words relate to each other throughout an entire document. This allows them to generate coherent, contextually appropriate content that remembers information from earlier in the sequence.

In simple words, Transformers revolutionized the AI world by introducing a system that helps the computer focus on the most important parts of information, much like how humans pay attention to what matters most in a conversation.

- Uses a clever “attention” system to focus on relevant information

- Can look at entire paragraphs at once instead of just one word at a time

- Powers most modern text generators and many text-to-image tools

- Generally works better when the model is larger and has been trained on more examples

- Forms the foundation of popular writing assistants and chat tools
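
The attention idea fits in a short Python sketch. This version implements scaled dot-product attention for a single query, which is the core calculation inside a transformer. The vectors below are made-up numbers chosen for illustration. In a real model they are learned, there are many attention "heads" running side by side, and the whole thing is stacked in layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # How well does the query match each key? (dot product, scaled by sqrt(d))
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Blend the values, giving more weight to the better-matching positions.
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return output, weights

# Three positions in a sequence, each with a key and a value (illustrative numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]

output, weights = attention(query, keys, values)
print("attention weights:", weights)
print("blended output:", output)
```

The query lines up with the first and third keys, so those positions get most of the weight and the second is nearly ignored. Nothing in the calculation depends on how far apart the positions are, which is why transformers can connect words that sit far from each other in a document.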

5. Diffusion Models

Diffusion models work by gradually adding random noise to data (like an image) until it becomes completely noisy, then learning to reverse this process. To generate something new, they start with pure noise and gradually remove it step by step until a clear image (or other content) emerges. This approach is like a sculptor carefully removing material to reveal a figure, or watching a photograph slowly develop from a blurry beginning to a sharp final image.

In simple words, Diffusion models take a unique approach – they start with random static (like TV snow) and gradually clear up the picture until a clear image appears. It’s like watching a photograph slowly develop.

- Begins with random noise and gradually transforms it into clear images

- Currently makes some of the most detailed and realistic computer-generated pictures

- Allows users to give more specific guidance about what they want created

- Needs powerful computers, especially for high-quality images

- Behind many of the most impressive AI art tools that have gone viral online
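
Here is a small sketch of the noising half of that process, using a single number in place of an image. It assumes a linear noise schedule over 1,000 steps with commonly used start and end values. The names (`add_noise`, `estimate_clean`) are made up for this example. The part a real diffusion model has to learn, a neural network that predicts the noise in a noisy sample, is replaced here by an "oracle" that simply reuses the true noise, so this shows the bookkeeping and not a working generator.

```python
import math
import random

T = 1000
# Linear noise schedule (an assumption for this sketch): a little noise early, more later.
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] = fraction of the original signal that survives after t + 1 noising steps.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)

def add_noise(x0, t, eps):
    """Jump straight to noising step t: mix the clean value with Gaussian noise eps."""
    return math.sqrt(alpha_bar[t]) * x0 + math.sqrt(1.0 - alpha_bar[t]) * eps

def estimate_clean(x_t, t, predicted_eps):
    """Invert add_noise, given a prediction of the noise that was mixed in."""
    return (x_t - math.sqrt(1.0 - alpha_bar[t]) * predicted_eps) / math.sqrt(alpha_bar[t])

rng = random.Random(0)
x0 = 0.8                                    # stand-in for one pixel of a clean image
eps = rng.gauss(0.0, 1.0)

x_noisy = add_noise(x0, 500, eps)
x_back = estimate_clean(x_noisy, 500, eps)  # oracle: we reuse the true noise

print("signal kept at the last step:", alpha_bar[-1])
print("noisy value:", x_noisy, "recovered:", x_back)
```

By the final step almost none of the original signal is left, which is why generation can start from pure noise. A trained model replaces the oracle with its own noise prediction and removes the noise over many small steps instead of one jump, and that repeated work is where the heavy computing cost comes from.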

6. Recurrent Neural Networks (RNNs) and LSTMs

RNNs are models designed to handle sequential data by maintaining a kind of memory of what they’ve seen before. They process information in loops, allowing information to persist. LSTMs (Long Short-Term Memory networks) are a special type of RNN that can remember information for longer periods, making them better at tasks requiring longer context. Think of them as readers who can remember earlier chapters while reading a book.

In simple words, RNNs and LSTMs are some of the older types of generative AI that work with sequential data. They process information step by step while keeping track of what came before, kind of like how you remember earlier words in a sentence to understand the whole meaning.

- Uses a loop-like structure that passes information from one step to the next

- Good at handling text, speech, and music where order matters

- LSTMs add special memory cells that help remember important information for longer

- Used in voice assistants, music generators, and some text tools

- Largely replaced by transformer models for many tasks, but still useful for certain applications
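
The loop-like structure is easy to see in code. Below is a minimal sketch of a plain RNN with a single-number memory, written in Python. The weights `w_x`, `w_h`, and `b` are hand-picked for illustration where a real network would learn them, and the extra gates that make an LSTM an LSTM are left out to keep the example short.

```python
import math

def rnn_final_state(inputs, w_x=1.0, w_h=0.5, b=0.0):
    """Run a one-unit RNN over a sequence and return its final memory (hidden state)."""
    h = 0.0                                   # memory starts empty
    for x in inputs:
        # New memory = squashed mix of the current input and the previous memory.
        h = math.tanh(w_x * x + w_h * h + b)
    return h

# Same ingredients, different order: the signal arrives early in one, late in the other.
early = rnn_final_state([1.0, 0.0, 0.0])
late = rnn_final_state([0.0, 0.0, 1.0])

print("signal seen early:", early)
print("signal seen late:", late)
```

The two sequences contain the same values, but the final memory differs because order matters to the loop. The early signal has also faded by the end of the sequence, and this fading is the problem LSTM memory cells were designed to reduce.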

7. Flow-Based Models

Flow-based models use a series of reversible transformations to convert between complex data (like images) and simple distributions (like random noise). These transformations can be run forward to convert real data into noise or backward to generate new data from noise. They’re designed so that both directions are efficient to compute, making them useful for tasks where you need to go back and forth between the original and generated content.

In simple words, Flow-based models transform data back and forth between complex structures and simple patterns using steps that can be reversed. Think of them as translators that can convert complicated information into a simpler form and back again.

- Uses a series of reversible transformations that can run in either direction

- Can calculate exactly how likely a piece of data is under the model, rather than relying on an approximation

- Good at tasks that require moving back and forth between data and its simpler underlying representation

- Less common than other approaches, but valuable for specialized applications

- Examples include high-quality image generation and audio synthesis
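
A one-step "flow" makes the reversible idea concrete. The Python sketch below uses the simplest possible transformation, a shift and a scale on a single number, with hand-picked values standing in for learned ones (`SHIFT` and `SCALE` are assumptions for this example). Real flow-based models chain many more elaborate reversible steps, but the three pieces are the same: a forward direction, an exact inverse, and an exact likelihood from the change-of-variables formula.

```python
import math
import random

SHIFT, SCALE = 2.0, 3.0      # hypothetical "learned" parameters

def to_noise(x):
    """Forward direction: map a data value to the simple (standard normal) space."""
    return (x - SHIFT) / SCALE

def to_data(z):
    """Backward direction: the exact inverse, used for generating new data."""
    return z * SCALE + SHIFT

def log_likelihood(x):
    """Exact log-probability of x via the change-of-variables formula."""
    z = to_noise(x)
    log_base = -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)   # log N(z; 0, 1)
    log_det = -math.log(abs(SCALE))                            # log |dz/dx|
    return log_base + log_det

rng = random.Random(0)
sample = to_data(rng.gauss(0.0, 1.0))       # generate: draw noise, run it backward

print("generated value:", sample)
print("round trip of 5.0:", to_data(to_noise(5.0)))
print("log-likelihood of 2.0:", log_likelihood(2.0))
```

Because every step can be undone exactly, the model can score real data and generate new data with the same set of parameters. That is the exactness the list above refers to.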

Applications of Generative AI in Real Life

Generative AI has moved from research labs into everyday tools that people use for work and fun. These technologies now help with creating content, designing products, writing code, and solving problems across many industries.

1. Content Creation

The most visible use of generative AI happens in content creation. Writers use text generators to help draft articles, marketing copy, and social media posts. Video editors use AI to create rough drafts, generate captions, or even expand short clips into longer sequences. Podcasters use voice synthesis to fix audio mistakes without re-recording.

Many marketing teams now use these tools to generate first drafts of ads, emails, and product descriptions. Some news organizations use generative AI to create initial drafts of data-heavy stories like financial reports or sports recaps, which human editors then review and improve.

2. Design and Art

Designers increasingly use generative AI as a brainstorming partner. Interior designers can quickly generate room layouts based on space constraints. Fashion designers can create new pattern variations or visualize clothing on different body types. Product designers can rapidly prototype different versions of packaging or physical products.

Digital artists use these tools to generate base images that they can refine, or to transform their sketches into more detailed artwork. Some video game studios use generative AI to create background elements and textures or even generate basic game levels that developers can customize.

3. Software Development

Programmers now regularly use code-generating AI to speed up their work. These tools can suggest code completions, generate functions based on comments, or even convert pseudocode into working programs. Many developers use them to explain complex code, generate test cases, or debug problematic sections.

Web developers use generative AI to quickly create basic layouts, generate CSS for specific designs, or build simple animations. Mobile app developers can generate starter code for common features like login screens or data entry forms.

4. Healthcare and Science

Medical researchers use generative models to design molecules for potential new medicines. By generating thousands of possible compounds that might treat specific conditions, they can identify promising candidates much faster than traditional methods.

In radiology, generative AI helps create clearer medical images from lower-quality scans, reducing the need for repeat procedures. Some research teams also use these models to generate synthetic medical data for training purposes while protecting patient privacy.

5. Gaming and Entertainment

Video game developers use generative AI to create diverse character models, generate dialogue for background characters, or build procedurally generated worlds that feel unique for each player. Some games now use these tools to adapt stories based on player choices or generate unlimited new levels.

Movie and TV production teams use generative technology to create special effects, generate background crowds, or visualize scenes before filming. Music producers use AI to generate instrumental tracks, suggest chord progressions, or create backing vocals.

Choosing the Right Type of Generative AI Model

Picking the right generative AI approach depends on what you’re trying to create, how much control you need, and what resources you have available. Here’s a simple breakdown of which types work best for different needs:

For Image Generation

- Diffusion Models: Best for high-quality, detailed images with specific guidance

- GANs: Good for realistic photos and quick generation

- VAEs: Useful when you need to control specific features or attributes

For Text Generation

- Transformer Models: Best for most text tasks, especially longer content

- Autoregressive Models: Good for structured text like code or formatted documents

- RNNs/LSTMs: Sometimes used for simple text generation with fewer resources

For Audio and Music

- Diffusion Models: Increasingly used for high-quality speech and music

- Autoregressive Models: Good for music generation note by note

- GANs: Used for voice conversion and audio style transfer

For 3D and Video

- Diffusion Models: Leading approach for video generation

- GANs: Good for motion transfer and face animation

- Transformers: Emerging as powerful video generators with the right architecture

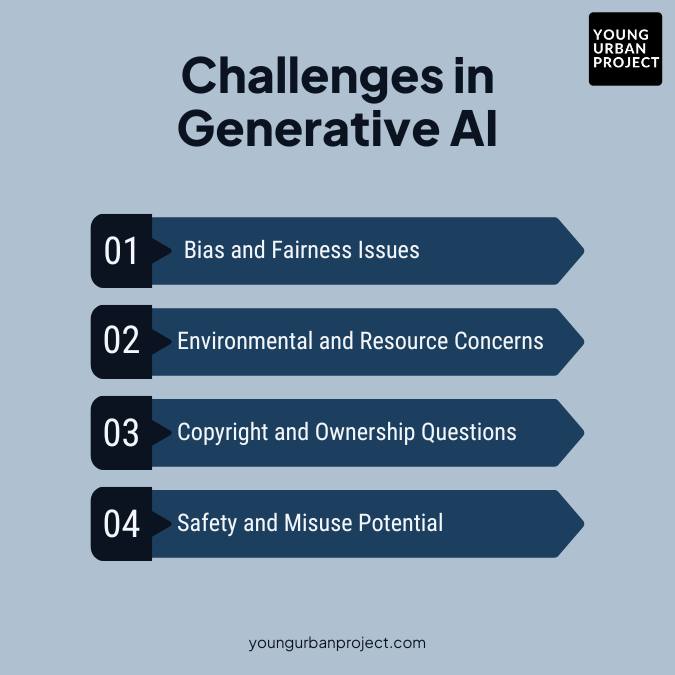

Challenges in Generative AI

Despite all the progress, generative AI still faces several important challenges that limit its use or raise concerns about its impact.

1. Bias and Fairness Issues

Generative models learn from existing data, which means they often pick up and amplify biases present in that data. Text generators might use stereotypical descriptions for certain groups. Image generators might create less diverse representations than requested. These biases can reinforce harmful stereotypes or lead to unfair treatment if not carefully addressed.

Many development teams now use techniques to measure and reduce bias in their models, but completely eliminating these problems remains difficult. Users need to carefully check AI outputs, especially when generating content about people or sensitive topics.

2. Environmental and Resource Concerns

Training large generative models requires enormous computing power, sometimes hundreds or thousands of specialized processors (GPUs) running for weeks. This process consumes significant electricity and produces carbon emissions. Even using already-trained models for generation can require substantial computing resources.

As these models grow larger and more complex, their environmental footprint increases. Some researchers now focus on creating more efficient models that can deliver similar results with less computing power.

3. Copyright and Ownership Questions

Generative AI learns from existing works, raising questions about copyright and intellectual property. When a model generates an image after learning from millions of artworks, who owns that new image? What happens when a text generator produces content similar to specific sources it trained on?

These questions remain partly unresolved, with ongoing legal cases and debates about fair use, attribution, and compensation for creators whose work was used in training. Some companies now offer models trained only on licensed content or public domain works to address these concerns.

4. Safety and Misuse Potential

Powerful generative tools can be misused to create convincing fake news, deepfakes, or other harmful content. As generation quality improves, it becomes harder to distinguish between authentic and AI-generated content.

Developers implement various safeguards to prevent harmful outputs, but determined users can sometimes work around these protections. Society continues to develop detection tools, policies, and education to address these risks.

Conclusion

Generative AI represents one of the most significant technological shifts in recent years. These models can now create content that’s increasingly difficult to distinguish from human-made work, opening new possibilities across many fields.

The different types of generative models each have their strengths, from the competitive approach of GANs to the noise-removal technique of diffusion models. Understanding these differences helps in choosing the right approach for specific needs.

As these technologies continue to develop, we’ll likely see them become even more accessible and powerful. The models will generate higher quality content with less computing power and offer more control over the outputs. The line between human and machine creation will continue to blur.

The most effective use of generative AI will likely involve collaboration between humans and machines. The technology offers tremendous creative possibilities, but human judgment, ethics, and creativity remain essential to guide these tools toward their most beneficial applications.

FAQs: Types of Generative AI

1. What are the main types of generative AI models?

The main types include Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), Transformer-based models, Diffusion Models, Autoregressive Models, Recurrent Neural Networks (RNNs), and Flow-based Models. Each uses different approaches to learn patterns and generate new content.

2. How does a generative adversarial network (GAN) work?

GANs use two competing neural networks: a generator that creates content and a discriminator that evaluates how realistic that content looks. The generator tries to fool the discriminator, while the discriminator tries to correctly identify real versus fake content. Through this competition, the generator gets better at creating realistic outputs.

3. What’s the difference between transformer models and autoregressive models?

Most transformer models used for text generation are autoregressive (meaning they generate content sequentially, one piece at a time), but the two terms describe different things. “Transformer” names an architecture built around an attention mechanism that helps the model understand relationships between different parts of the data. “Autoregressive” names a way of generating content. Some transformers are not autoregressive, and some autoregressive models, such as RNNs, are not transformers.

4. Which generative AI model is best for image generation?

Currently, diffusion models (like those used in Stable Diffusion and DALL·E 2) generally produce the highest quality images with the most control. GANs can sometimes generate realistic images faster, while VAEs offer better control over specific features but often with less detail.

5. Are generative AI models used for creating videos or music?

Yes, generative AI is increasingly used for both video and music creation. Diffusion models can generate short videos from text descriptions. For music, specialized models can compose melodies, suggest chord progressions, or even generate complete tracks in specific styles.

6. What’s the role of large language models (LLMs) in generative AI?

Large language models are a subset of generative AI that focuses on text. Based on transformer architectures, they can generate human-like text, translate languages, write in many creative formats, and answer questions. They’ve become some of the most widely used generative AI tools because of their versatility and their ability to keep track of context.