Overview

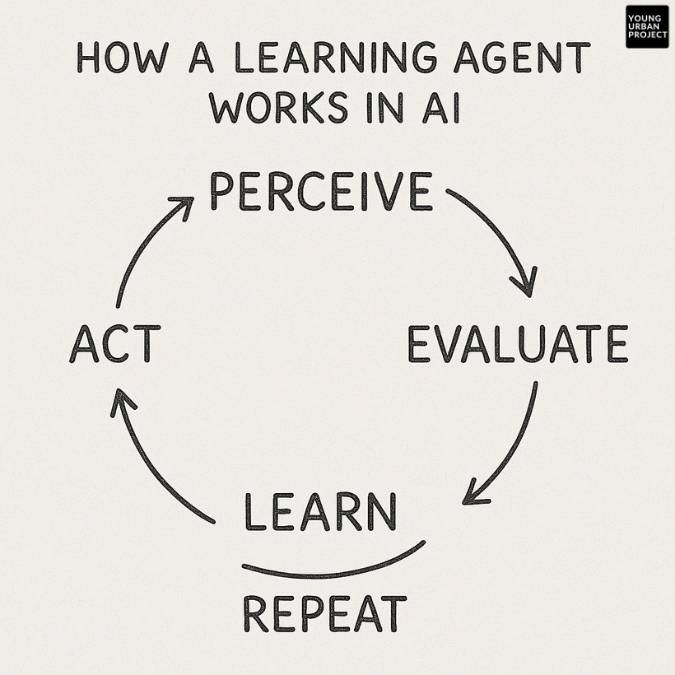

A learning agent in AI is a system that improves its performance by learning from experience. It observes the environment, evaluates outcomes, and updates its internal knowledge to make better decisions over time.

What is a Learning Agent in AI?

Let’s break this down without getting too caught up in buzzwords.

A learning agent in AI is a type of intelligent agent, which just means it’s a system designed to perceive its environment and take actions toward achieving a goal. What makes a learning agent different is that it doesn’t rely on a fixed set of rules. Instead, it adapts over time by learning from the outcomes of its actions.

So rather than saying, “If X happens, always do Y,” a learning agent starts with some logic (or maybe none at all), takes actions, and then uses feedback from the environment to figure out what works and what doesn’t. It improves through experience, just like humans do.

This sets it apart from other types of AI agents:

- Reflex agents: They react to inputs with hard-coded responses. Fast, but not adaptable.

- Model-based agents: These keep an internal model of the world and decide based on that, but the model and rules are supplied by the designer; the agent doesn’t learn from experience.

- Learning agents: These evolve. They’re dynamic. They don’t just run instructions; they adjust based on what actually happens.

You’ll hear “learning agent” a lot in discussions around modern AI systems, especially those using machine learning or reinforcement learning. They’re a big reason why AI feels less static and more… well, intelligent.

How Does a Learning Agent Work in Artificial Intelligence?

Let’s go step-by-step, because while the concept is intuitive, the inner workings are worth digging into.

A learning agent typically has four key components, and each one plays a specific role:

- Learning Element

- Critic

- Performance Element

- Problem Generator

Here’s how they work together:

Step 1: The Performance Element takes action

This is the part of the agent that actually does things. It chooses actions based on what it currently knows. You could think of this like the “decision-maker.”

Step 2: The Critic gives feedback

Once the agent acts, the critic steps in. It looks at the results of the action and says, essentially, “Was that good or bad?” It evaluates performance based on a predefined goal or success metric.

Step 3: The Learning Element updates the agent

Using the feedback from the critic, the learning element tweaks the agent’s internal knowledge or strategy. Over time, it learns patterns, improves predictions, and hopefully starts making better decisions.

Step 4: The Problem Generator suggests new things to try

This might sound like an odd piece, but it’s crucial. The problem generator pushes the agent to explore by suggesting actions it hasn’t tried before. This keeps the agent from getting stuck repeating the same thing just because it works “well enough.”

The full cycle looks something like this:

Perceive → Act → Evaluate → Learn → Explore → Repeat

Now, is this process always clean and efficient? Not really. Early attempts often include a lot of trial and error. Some actions fail. Some feedback is noisy. But that’s part of the learning loop: it’s designed to absorb messiness and still move forward.
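
To make the cycle above concrete, here is a minimal sketch of the four components wired together. It is a toy, not a reference design: the slot-machine style environment, the payout probabilities, the `goal` threshold, and every class and method name are assumptions made up for illustration.

```python
import random


class LearningAgent:
    """Toy learning agent for a three-action problem (hypothetical example)."""

    def __init__(self, n_actions, explore_prob=0.1, seed=0):
        self.values = [0.0] * n_actions   # learned estimate of each action's worth
        self.counts = [0] * n_actions     # how often each action has been tried
        self.explore_prob = explore_prob
        self.rng = random.Random(seed)

    def performance_element(self):
        # Act on current knowledge: pick the action with the best estimate.
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def problem_generator(self):
        # Occasionally suggest a random action so the agent keeps exploring.
        if self.rng.random() < self.explore_prob:
            return self.rng.randrange(len(self.values))
        return None

    def critic(self, reward, goal=0.5):
        # Score the outcome against a fixed performance standard.
        return reward - goal

    def learning_element(self, action, feedback):
        # Running average: nudge the estimate toward the observed feedback.
        self.counts[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.counts[action]

    def step(self, environment):
        suggestion = self.problem_generator()
        action = suggestion if suggestion is not None else self.performance_element()
        reward = environment(action)                        # act, then observe
        self.learning_element(action, self.critic(reward))  # evaluate, then learn
        return action


def run_demo(steps=3000, seed=1):
    env_rng = random.Random(seed + 1000)
    payout_prob = [0.2, 0.5, 0.8]   # hidden from the agent (assumed values)

    def environment(action):
        return 1.0 if env_rng.random() < payout_prob[action] else 0.0

    agent = LearningAgent(n_actions=3, explore_prob=0.1, seed=seed)
    for _ in range(steps):
        agent.step(environment)
    return agent
```

Calling `run_demo()` returns the trained agent; inspecting `agent.values` and `agent.counts` shows which action it came to prefer and how much of its time went to exploring.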

What Are the Main Components of a Learning Agent?

Let’s zoom in on those four parts we just touched on, because understanding them individually really helps make sense of how this whole system functions in real life.

| Component | What It Does |
| --- | --- |
| Learning Element | Learns from feedback and updates future behavior |
| Critic | Judges the agent’s action and provides evaluative feedback |
| Performance Element | Actually takes actions based on the current knowledge or strategy |
| Problem Generator | Introduces new actions to encourage exploration and discovery |

Let’s walk through each one quickly:

1. Learning Element

This is the “brain” that gets smarter. It doesn’t just memorize responses; it learns relationships and adjusts rules or predictions. It typically relies on machine learning models, including neural networks in more advanced systems.

2. Critic

The critic is more like a teacher or coach than a judge. It tells the system whether it’s on the right track but doesn’t directly tell it what to do. It helps the learning element know what to reinforce or avoid.

3. Performance Element

This is what interacts with the environment, the outward-facing part. It’s also the piece that people or systems around it usually “see” working. When your spam filter blocks an email, this is the element in action.

4. Problem Generator

A bit underrated, honestly. The problem generator is what keeps the system from becoming lazy or overfitting to one narrow set of actions. It pushes boundaries by introducing risk and novelty, key ingredients in meaningful learning.

Together, these parts allow a learning agent to be more than a static tool. It becomes adaptive. And that’s a huge leap forward from the rigid systems we used to rely on.

What Types of Learning Do AI Learning Agents Use?

This is where it gets really interesting, because not all learning agents are created equal. Depending on how they learn from data or experience, they usually fall into one of three major learning categories:

1. Supervised Learning

Here, the agent is given a dataset that includes input-output pairs. It learns from examples where the “right” answer is already known.

Example: Image recognition tools trained to identify cats in photos. They’re shown thousands of labeled images (“this is a cat,” “this is not”) and learn patterns from there.
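
Real image recognizers are far larger, but the core idea of learning from labeled pairs fits in a few lines. The sketch below trains a perceptron on two made-up numeric features per photo; the feature values, labels, and function names are all hypothetical stand-ins, not a real vision pipeline.

```python
def predict(weights, bias, features):
    """Return 1 ("cat") if the weighted sum is positive, else 0 ("not cat")."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Classic perceptron rule: adjust weights only when a prediction is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Labeled input-output pairs: (made-up feature vector, 1 = cat / 0 = not cat).
EXAMPLES = [
    ((0.9, 0.8), 1), ((0.2, 0.1), 0),
    ((0.8, 0.9), 1), ((0.1, 0.3), 0),
    ((0.7, 0.7), 1), ((0.3, 0.2), 0),
]

weights, bias = train_perceptron(EXAMPLES)
```

After training, `predict(weights, bias, (0.85, 0.75))` classifies an unseen photo using only what was learned from the labeled examples.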

2. Unsupervised Learning

There are no labels in the data here. The agent just looks for patterns, clusters, or structures on its own.

Example: Grouping customers by purchasing behavior without any predefined segment labels. The AI finds commonalities on its own and segments customers accordingly.
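
As a rough illustration of finding groups without labels, here is a small k-means sketch over a single number per customer. The monthly-spend figures and the function name are invented for the example, and the simple start (spreading the initial centers across the sorted data) assumes `k` is at least 2.

```python
def kmeans_1d(values, k=2, iterations=10):
    """Group numbers into k clusters by repeatedly re-centering (requires k >= 2)."""
    ordered = sorted(values)
    # Deterministic start: spread the initial centers across the sorted data.
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for value in values:
            # Assign each value to its nearest center.
            nearest = min(range(k), key=lambda c: abs(value - centers[c]))
            clusters[nearest].append(value)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(group) / len(group) if group else centers[i]
                   for i, group in enumerate(clusters)]
    return centers, clusters


# Hypothetical monthly spend per customer; no labels are provided.
monthly_spend = [12, 15, 11, 14, 95, 102, 98, 110]
centers, clusters = kmeans_1d(monthly_spend, k=2)
```

Nothing told the code which customers were "low" or "high" spenders; the two groups fall out of the data.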

3. Reinforcement Learning (RL)

This is the most relevant when we talk about learning agents specifically. The agent takes actions, gets rewards (or penalties), and uses that feedback to improve.

Example: A robot learning to walk by trial and error. If it falls, it adjusts. If it stays upright longer, it reinforces those motions.
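
A walking robot is too big for a snippet, so the sketch below swaps in a much smaller stand-in: an agent in a five-cell corridor that earns a reward only when it reaches the right-hand end. It uses tabular Q-learning, one common RL algorithm; the corridor, the reward of 1.0, and the hyperparameter values are all assumptions chosen for illustration.

```python
import random


def train_q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9,
                     epsilon=0.2, seed=0):
    """Tabular Q-learning on a corridor; action 0 moves left, action 1 moves right."""
    rng = random.Random(seed)
    moves = (-1, +1)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]   # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != goal:
            if rng.random() < epsilon:
                action = rng.randrange(2)        # explore
            else:
                best = max(q[state])             # exploit, breaking ties at random
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            # Move the estimate toward reward plus discounted best future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best-known action for every non-goal state."""
    return ["right" if row[1] > row[0] else "left" for row in q[:-1]]


q_table = train_q_learning()
```

`greedy_policy(q_table)` shows what the agent learned purely from rewards: nobody told it which direction was correct.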

Quick recap:

| Learning Type | Feedback Type | Used By Learning Agents? |
| --- | --- | --- |
| Supervised Learning | Labeled data | Sometimes |
| Unsupervised Learning | No labels | Occasionally |
| Reinforcement Learning | Rewards + penalties | Very often |

Not every learning agent uses all three types, but reinforcement learning is the one most closely associated with how learning agents interact with the real world.

Real-Life Examples of Learning Agents in AI Systems

Now let’s bring all that theory down to earth.

Learning agents aren’t just abstract concepts from AI textbooks. They’re working behind the scenes in technologies most of us interact with every day, or at least hear about constantly.

1. Self-Driving Cars (like Tesla Autopilot)

These vehicles learn from real-world traffic conditions, road layouts, driver interventions, and more. The system improves over time based on what the fleet “sees” and experiences on the road, with retrained models typically reaching cars through software updates. That’s a learning agent in action, with every mile feeding the next round of improvement.

2. AI in Games (e.g., AlphaGo, Dota bots)

Gaming AI agents learn how to beat opponents not by memorizing patterns but by improving strategies through trial and error. Some, like the original AlphaGo, start by studying records of human games; they then try different tactics and refine their approach over a huge number of simulated games, often by playing against themselves.

3. Spam Filters (Gmail, Outlook)

Your email’s spam filter learns from what you mark as “spam” or “not spam.” Every time you manually correct it, that feedback helps the agent adjust and make better decisions going forward.

These examples are everywhere, and the common thread? Feedback loops. The systems get better by learning from real use, not just initial programming.
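
The spam-filter feedback loop is easy to sketch. The class below is a bare-bones word-count classifier in the naive Bayes style that updates every time a user marks a message; it is an illustration of the loop only, not how Gmail or Outlook actually work, and the class name, labels, and sample messages are hypothetical.

```python
import math
from collections import Counter


class FeedbackSpamFilter:
    """Word-count filter that learns from each user correction (toy example)."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.message_counts = {"spam": 0, "ham": 0}

    def learn(self, text, label):
        """Record one piece of user feedback; label is 'spam' or 'ham'."""
        self.message_counts[label] += 1
        self.word_counts[label].update(text.lower().split())

    def _word_prob(self, word, label, vocab_size):
        counts = self.word_counts[label]
        # Add-one smoothing; the extra +1 reserves probability for unseen words.
        return (counts[word] + 1) / (sum(counts.values()) + vocab_size + 1)

    def spam_score(self, text):
        """Log-odds that the message is spam; positive means 'more likely spam'."""
        vocab_size = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        score = math.log((self.message_counts["spam"] + 1)
                         / (self.message_counts["ham"] + 1))
        for word in text.lower().split():
            score += math.log(self._word_prob(word, "spam", vocab_size)
                              / self._word_prob(word, "ham", vocab_size))
        return score

    def is_spam(self, text):
        return self.spam_score(text) > 0


spam_filter = FeedbackSpamFilter()
for text, label in [("win free money now", "spam"),
                    ("free prize claim now", "spam"),
                    ("meeting agenda for monday", "ham"),
                    ("lunch on monday", "ham")]:
    spam_filter.learn(text, label)   # each call stands in for a user clicking a button
```

Before any feedback the filter has no opinion; after four corrections, `spam_filter.is_spam("free money")` and `spam_filter.is_spam("monday meeting")` already come out differently.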

Benefits of Using Learning Agents in AI

Okay, so what’s the big deal? Why not just build static rule-based systems?

Here’s why learning agents are a huge leap forward in AI:

1. Continuous Improvement

These systems aren’t frozen at launch. As long as there’s useful new data or new experience, the agent can keep improving its decision-making.

2. Better Decision-Making Over Time

Because they adjust based on past successes (and mistakes), their future choices become more accurate, relevant, and efficient.

3. Adaptability to Dynamic Environments

Learning agents shine in environments that aren’t predictable. Whether it’s weather changes, shifting user behavior, or unexpected obstacles, they can adapt.

4. Less Human Intervention

Once trained well, these agents often need far less hands-on tuning. That’s a major win in systems where scale or complexity makes manual tuning impossible.

Still, they’re not magical. And that leads us to…

Challenges in Designing a Learning Agent

Nothing in AI comes for free. Building effective learning agents takes serious thought and, often, quite a bit of trial and error.

1. Data Quality & Quantity

Without good data, even the best-designed agent is basically flying blind. Garbage in, garbage out. And in some domains, getting enough quality data is a huge bottleneck.

2. Computational Resources

Learning, especially through reinforcement learning, can be computationally expensive. Training an agent to do a simple task might take hours (or days) on powerful machines.

3. Exploration vs Exploitation

This is a classic dilemma. Should the agent try new actions to discover better ones (exploration), or stick to what already works (exploitation)? Striking a balance isn’t easy: explore too much and you waste time on poor actions; explore too little and you miss better solutions.
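
One way to see the trade-off is to run the same simple agent with different exploration rates. The sketch below uses an epsilon-greedy rule, a common but by no means the only strategy; the three fixed payoffs and the function name are assumptions picked so the effect is easy to read.

```python
import random


def average_reward(epsilon, steps=1000, seed=0):
    """Average reward of an epsilon-greedy agent on three fixed-payoff actions."""
    rng = random.Random(seed)
    payoffs = [0.3, 0.5, 0.9]            # hypothetical rewards, unknown to the agent
    estimates = [0.0] * len(payoffs)
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(payoffs))                            # explore
        else:
            action = max(range(len(payoffs)), key=lambda a: estimates[a])   # exploit
        reward = payoffs[action]
        estimates[action] = reward       # payoffs are deterministic, so one sample is enough
        total += reward
    return total / steps


never_explores = average_reward(epsilon=0.0)    # locks onto the first action it tries
balanced = average_reward(epsilon=0.1)          # mostly exploits, sometimes explores
always_explores = average_reward(epsilon=1.0)   # acts at random forever
```

With no exploration the agent settles for the first action that pays anything; with constant exploration it never cashes in on what it has learned. In this toy setup the small nonzero rate does best.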

4. Safety in Decision-Making

In high-stakes environments (like healthcare or self-driving), a learning agent making mistakes isn’t just inefficient; it could be dangerous. So designers have to build in constraints or safety nets.

Learning Agent vs Other AI Agent Types

Here’s a simplified breakdown of how different types of AI agents compare in terms of learning ability, adaptability, and use of internal models:

| Agent Type | Learns from Experience | Adapts to the Situation | Uses Internal Model |
| --- | --- | --- | --- |
| Reflex Agent | No | No | No |
| Model-Based Agent | No | Yes (via its model) | Yes |
| Learning Agent | Yes | Yes | Optional |

- Reflex Agents react based on predefined rules. No learning. Just stimulus → response.

- Model-Based Agents can adapt to different situations by using an internal model, but they’re not learning from experience.

- Learning Agents are the only ones here that genuinely evolve over time.

So if the goal is to build something that improves on its own? Learning agents are your go-to.
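
The difference between the three agent types shows up clearly in code. Here is a minimal sketch using a made-up room-cleaning task; the class names, percepts ("dirty"/"clean"), actions, and reward value are all hypothetical, and each class is deliberately stripped down to the one trait that sets it apart.

```python
class ReflexAgent:
    """Fixed stimulus -> response rule; nothing is remembered or learned."""

    def act(self, percept):
        return "clean" if percept == "dirty" else "move"


class ModelBasedAgent:
    """Tracks which rooms it believes are clean, but its rules never change."""

    def __init__(self, rooms):
        self.rooms = set(rooms)
        self.known_clean = set()     # the internal model of the world

    def act(self, room, percept):
        if percept == "dirty":
            self.known_clean.discard(room)
            return "clean"
        self.known_clean.add(room)
        # Use the model: stop once every room is believed to be clean.
        return "idle" if self.known_clean == self.rooms else "move"


class LearningAgent:
    """Starts with no rules and learns which action pays off for each percept."""

    def __init__(self, actions=("move", "clean")):
        self.actions = actions
        self.values = {}             # (percept, action) -> estimated reward

    def act(self, percept):
        return max(self.actions, key=lambda a: self.values.get((percept, a), 0.0))

    def learn(self, percept, action, reward, rate=0.5):
        old = self.values.get((percept, action), 0.0)
        self.values[(percept, action)] = old + rate * (reward - old)
```

Only the last class changes its behavior after feedback: a `LearningAgent` that is penalized for moving away from a dirty room will choose `"clean"` the next time it sees one.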

Top AI Tools & Libraries to Build Learning Agents

If you’re even mildly curious about building your own learning agents, or just want to understand what’s happening under the hood, these tools are worth checking out.

1. TensorFlow

Developed by Google, it’s a deep learning framework that’s great for building neural network-based agents.

2. PyTorch

Originally developed at Meta (then Facebook) and now governed by the PyTorch Foundation, it’s incredibly flexible and developer-friendly. Especially useful for custom reinforcement learning implementations.

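3. OpenAI Gym

Think of this like a playground for training and testing RL agents. It gives you environments like games, simulations, and control tasks. The project is now maintained as Gymnasium by the Farama Foundation, so new projects usually start there.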

4. Stable Baselines3

A library built on top of PyTorch that offers ready-to-use implementations of popular RL algorithms. Huge time-saver.
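
To give a feel for what working with these tools looks like without installing anything, here is a hand-written toy environment that mimics the `reset`/`step` convention used by Gymnasium (the maintained successor to OpenAI Gym). It does not import the library; `LineWorld`, `run_episode`, and the two policies are hypothetical names, and a real project would subclass the library's own environment class instead.

```python
class LineWorld:
    """Toy environment: walk from cell 0 to the last cell of a short line."""

    def __init__(self, size=5, max_steps=50):
        self.size = size
        self.max_steps = max_steps
        self.position = 0
        self.steps = 0

    def reset(self, seed=None):
        # Returns (observation, info). The seed is accepted to match the
        # convention but unused, because this environment is deterministic.
        self.position = 0
        self.steps = 0
        return self.position, {}

    def step(self, action):
        # Returns (observation, reward, terminated, truncated, info).
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.size - 1)
        self.steps += 1
        terminated = self.position == self.size - 1                 # goal reached
        truncated = not terminated and self.steps >= self.max_steps  # ran out of time
        reward = 1.0 if terminated else 0.0
        return self.position, reward, terminated, truncated, {}


def run_episode(env, policy, seed=0):
    """Standard interaction loop: observe, act, collect reward, repeat."""
    observation, info = env.reset(seed=seed)
    total_reward, done = 0.0, False
    while not done:
        action = policy(observation)
        observation, reward, terminated, truncated, info = env.step(action)
        total_reward += reward
        done = terminated or truncated
    return total_reward


def always_right(observation):
    return 1


def always_left(observation):
    return 0
```

Any agent, from a hard-coded policy like `always_right` to a trained RL model, plugs into the same loop, which is a large part of why this interface caught on.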

How Are Learning Agents Used in Real-World AI Applications?

It’s not just theory. Companies are actively using learning agents to drive real value.

1. Tesla Autopilot

Tesla’s self-driving software gets smarter over time by collecting massive amounts of driving data. It learns from human drivers, sensor feedback, and millions of real-world scenarios.

2. Amazon

Their recommendation system is powered by agents that adapt to each user’s behavior. What you browse, buy, or skip all feeds into how future suggestions are made.

3. Google Search

Google constantly tweaks its results based on what people click, how long they stay on a page, and what they ignore. That’s learning, even if it feels invisible.

Can Learning Agents Improve Over Time Without Human Help?

Yes, and also no. Let me explain.

1. Self-learning is possible

Many agents improve through interaction alone, without direct human labeling or rules. That’s what reinforcement learning is all about.

2. But boundaries are still needed

Completely autonomous learning can go wrong fast if the system misinterprets goals or feedback. That’s why many real-world learning agents still operate under human supervision, or with safety protocols in place.

3. Active vs Passive Learning

Some agents actively seek out new experiences to learn from (active learning), while others passively wait for more data (passive learning). Both have their place, depending on the context.

It’s tempting to think of learning agents as totally hands-off. In reality, they still need guardrails, at least for now.

Future of Learning Agents in Artificial Intelligence

Looking ahead, learning agents are positioned to power some of the biggest advances in AI.

1. Healthcare

Imagine agents that personalize treatment plans based on how patients respond to medication, without needing constant reprogramming.

2. Space Exploration

Autonomous rovers or satellites that learn to adapt to unknown terrain or conditions far from Earth.

3. Robotics

Robots that don’t just follow orders, but adapt and improve how they carry out tasks in the real world – cleaning, delivery, caregiving, etc.

By 2030 and beyond, we’ll likely see learning agents becoming more embedded in our daily lives, quietly improving the tech around us in ways we don’t even notice.

Key Features of Learning Agents in AI

Here are five characteristics that define a learning agent:

- Adaptability – Learns from new data and changing environments

- Feedback-driven – Improves based on evaluation from the critic

- Exploratory behavior – Tries new strategies, not just what’s safe

- Performance-enhancing – Gets better at achieving goals over time

- Goal-oriented – Always optimizing for a success metric

Real Brand Use Cases: Learning Agents in Action

- DeepMind’s AlphaGo – Learned Go strategy from human game records and millions of games of self-play

- Tesla FSD – Continuously improving with real-time driving data

- Netflix – Learns from viewer actions to suggest better content

Summary

- Learning agents are AI systems that learn and evolve from experience

- Core components: learning element, critic, performance element, problem generator

- Used in Tesla, Netflix, AlphaGo, and many AI systems

- They improve decisions, adapt over time, and reduce need for human updates

- Reinforcement learning is key to their success

FAQs: Learning Agent in AI

Q1. What is a learning agent in AI?

A learning agent is an AI system that improves its performance by learning from experiences and feedback from the environment.

Q2. What are the components of a learning agent?

They are the learning element, critic, performance element, and problem generator, which help the agent learn, evaluate, act, and explore, respectively.

Q3. Is reinforcement learning part of learning agents?

Yes. Reinforcement learning is one of the main methods learning agents use to improve through trial, error, and rewards.

Q4. Where are learning agents used?

They are used in autonomous vehicles, recommendation engines, games, and robotics to adapt and improve over time.

Q5. How do learning agents differ from other agents?

Unlike reflex or model-based agents, learning agents evolve by analyzing past actions and outcomes to make smarter future decisions.